Container-as-a-Service (CaaS)

Are you interested in container-based virtualization options at operating system level? Do you want to save on the cost and effort required to provide the necessary hardware and software components? No problem! Thanks to Container-as-a-Service technology – the latest service model in cloud computing – container platforms are available as complete package solutions, hosted across the cloud. Here we present 3 of the most popular CaaS platforms: Google Container Engine (GKE), Amazon EC2 Container Service (ECS), and Microsoft Azure Container Service (ACS). Additionally, we will explain how you can use cloud-based container services in an enterprise context.

What is CaaS?

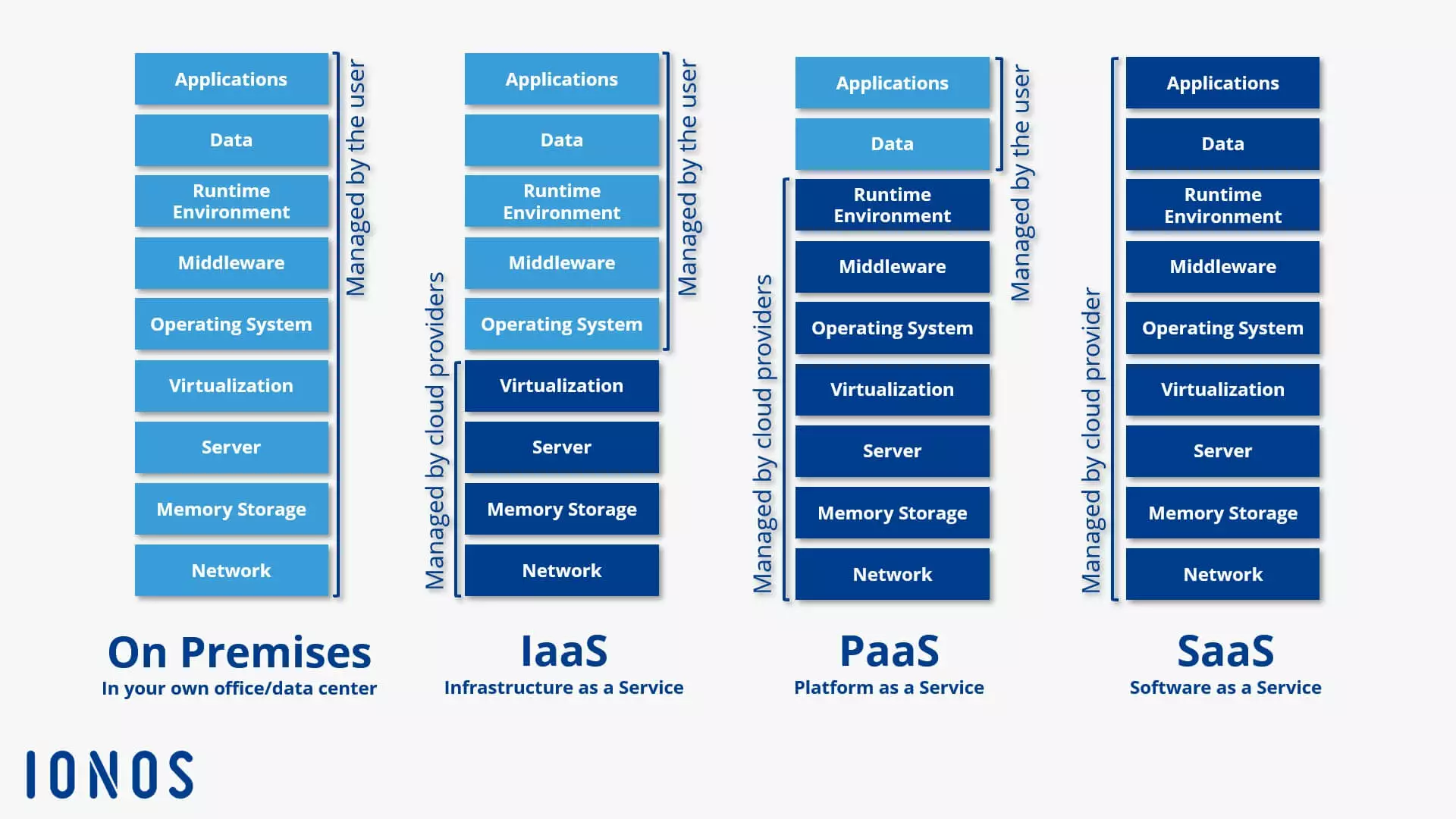

CaaS (short for Container-as-a-Service) is a business model whereby cloud computing service providers offer container-based virtualization as a scalable online service. This allows users to use container services without having to operate the necessary infrastructure themselves. The name is a marketing term modeled on established cloud service models such as Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS), and Software-as-a-Service (SaaS).

What are container services?

A container service is provided by a cloud computing provider and allows users to develop, test, execute, or distribute software in so-called application containers across IT infrastructures. Application containers are a concept from the Linux realm. The technology allows for virtualization at operating system level. Individual applications, including all dependencies (libraries, configuration files, etc.), are executed as encapsulated instances. This enables the parallel operation of several applications with different requirements on the same operating system, as well as deployment across different systems.

CaaS typically refers to a complete container environment, including orchestration tools, an image catalog (also called the registry), cluster management software, and a set of developer tools and APIs (Application Programming Interfaces). Billing usually follows a rental model.

Differentiating it from other cloud services

Since the mid-2000s, companies and private users have been using cloud computing as an alternative to providing IT resources on their own premises. The three traditional service models are IaaS, PaaS, and SaaS.

- IaaS: Infrastructure-as-a-Service provides virtual hardware resources such as computing power, storage space, and network capacity. IaaS vendors provide these basic IT infrastructure building blocks in the form of virtual machines (VMs) or virtual local area networks (VLANs). Cloud users access these resources over the internet using APIs. IaaS is the lowest level of the cloud computing model.

- PaaS: The middle tier of the cloud computing model is known as Platform-as-a-Service. Within the framework of PaaS, cloud providers provide programming platforms and development environments over the internet. As a rule, PaaS covers the entire lifecycle of the software (lifecycle management) from development, through testing, to delivery and operation. PaaS is based on IaaS.

- SaaS: The top level of the cloud computing model deals purely with applications. Software-as-a-Service involves providing application software over the internet. In this service model, the programs run on the provider’s own servers rather than on the customer’s hardware. SaaS is based on IaaS and PaaS. Access takes place through a web browser or agent software.

The goal of taking a service-oriented approach to providing IT resources is to help users focus completely on their core business. A developer who uses PaaS to test applications, for example, just needs to upload their own code to the cloud. All technical requirements for the build process, as well as managing and deploying the application, are provided by the PaaS platform provider.

To follow the classic model of cloud computing, CaaS could be placed between IaaS and PaaS. However, of these service models, Container-as-a-Service is distinguished by a fundamentally different approach to virtualization: using container technology.

CaaS also provides users with complete management of a software application’s life cycle. Unlike IaaS and PaaS, however, the provision of virtualized resources is not based on hypervisor-based virtualization of separate VMs, each with its own operating system. Instead, the Linux kernel’s native functions are used, which allow for the isolation of individual processes within the same operating system. Container technology creates an abstraction layer that encapsulates applications, including the file system, from the underlying system, thereby enabling operation on any platform that supports container technology. But what exactly does that mean for the user?

Software developers who want to make use of a cloud-based development environment must rely on the technologies provided by the vendor, such as restricted programming languages or frameworks in the PaaS model. Container-as-a-Service, on the other hand, provides users with a relatively free programming platform, in which applications encapsulated in containers can be scaled over heterogeneous IT infrastructures, regardless of their technical requirements.

Container-as-a-Service is a form of container-based virtualization that provides the runtime environment, orchestration tools, and all underlying infrastructure resources through a cloud computing provider.

How does CaaS work?

A Container-as-a-Service platform is a computer cluster that is available through the cloud and that users employ to upload, create, centrally manage, and run container-based applications. Interaction with the cloud-based container environment takes place either through a graphical user interface (GUI) or in the form of API calls. The provider dictates which container technologies are available to users. However, the core of each CaaS platform is an orchestration tool (also known as an orchestrator) that allows for the management of complex container architectures.

Container applications used in production environments usually consist of a cluster of several containers distributed over different physical and virtual systems; this is known as a multi-container application. Operating such applications requires considerable effort and can hardly be managed manually. Instead, orchestration tools organize the interaction between running containers and enable automated operation functions. The following functions are important:

- Distribution of containers across multiple hosts

- Grouping containers into logical units

- Container scaling

- Load balancing

- Storage capacity allocation

- Communication interface between containers

- Service discovery
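To make the first items on this list more concrete, the following is a minimal sketch of how an orchestrator might distribute the replicas of a service across several hosts. It is not taken from any real orchestrator: the `Host` class, the `place` and `scale` functions, and the "most free CPU first" strategy are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A hypothetical cluster host with a fixed CPU capacity (in cores)."""
    name: str
    cpu_capacity: float
    containers: list = field(default_factory=list)  # (container name, CPU request)

    def cpu_free(self) -> float:
        return self.cpu_capacity - sum(cpu for _, cpu in self.containers)


def place(hosts, container_name, cpu_request):
    """Place one container on the host with the most free CPU (spread strategy)."""
    candidates = [h for h in hosts if h.cpu_free() >= cpu_request]
    if not candidates:
        raise RuntimeError(f"no host can fit {container_name}")
    target = max(candidates, key=lambda h: h.cpu_free())
    target.containers.append((container_name, cpu_request))
    return target.name


def scale(hosts, service, replicas, cpu_request):
    """Start `replicas` copies of a service and return the host chosen for each."""
    return [place(hosts, f"{service}-{i}", cpu_request) for i in range(replicas)]


hosts = [Host("host-a", 4.0), Host("host-b", 4.0), Host("host-c", 2.0)]
placement = scale(hosts, "web", replicas=4, cpu_request=1.0)
print(placement)
```

Real orchestrators weigh many more factors (memory, affinity rules, failure domains), but the basic idea of matching resource requests against free capacity is the same.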

Which orchestrator is used within the CaaS framework has a direct influence on the functions made available to cloud service users. The market for container-based virtualization is currently dominated by three orchestration tools: Docker Swarm, Kubernetes, and Mesosphere DC/OS.

- Docker Swarm: Swarm is an open source cluster management and orchestration tool developed by Docker as a native tool for managing Docker clusters and container operations. The orchestrator is the foundation for Docker’s on-premises CaaS product Docker Datacenter (DDC), which is part of Docker Enterprise Edition (EE) and enables enterprises to run a self-managed Container-as-a-Service environment in their own data center or in private clouds (Docker Datacenter on AWS/Azure). Docker’s in-house tool is known for its easy installation and handling, and it is tightly integrated with the market leader’s container runtime environment. The orchestration tool uses the standard Docker API, providing compatibility with other Docker tools that communicate using the Docker API. Clusters and containers are administered using classic Docker commands.

- Kubernetes: Kubernetes is an open source tool for automated provisioning, scaling, and administration of container applications on distributed IT infrastructures. The tool was originally developed by Google and is now under the auspices of the Cloud Native Computing Foundation (CNCF). In contrast to Swarm, Kubernetes does not just support Docker containers. Through a container runtime interface (CRI), the orchestrator interfaces with various OCI-compliant and hypervisor-based container runtime environments, including competing runtimes such as the one from CoreOS. Compared to Docker Swarm, the installation and configuration of Kubernetes is significantly more complex.

- DC/OS: DC/OS (Data Center Operating System) is a further development of the open source cluster manager Apache Mesos. It is provided by Mesosphere (a Silicon Valley startup) as an operating system for data centers under an open source license.

A detailed description of the orchestration tools Swarm, Kubernetes, and DC/OS is available in the overview of the most popular Docker tools.

An overview of CaaS providers

Container technology is booming, making the range of CaaS services correspondingly large. Virtualization services at operating system level are found in almost all public cloud providers’ portfolios. Amazon, Microsoft, and Google – which are currently among the most influential players on the CaaS market – have expanded their cloud platforms with a Docker-based container solution over the last couple of years. At the beginning of 2018, IONOS also entered the CaaS business with its own cluster solution.

We compare the following products:

- Google Container Engine (GKE)

- Amazon EC2 Container Service (ECS)

- Azure Container Service (ACS)

When choosing a CaaS service for enterprise use, users should consider the following questions:

- Which orchestration tools are available?

- Which container formats does the platform support?

- Is it possible to operate multi-container applications?

- How are the clusters managed when operating a container?

- What networks and storage functions are supported?

- Does the provider issue a private registry for container images?

- How well is the container runtime environment integrated with other cloud services?

- Which billing models are available?

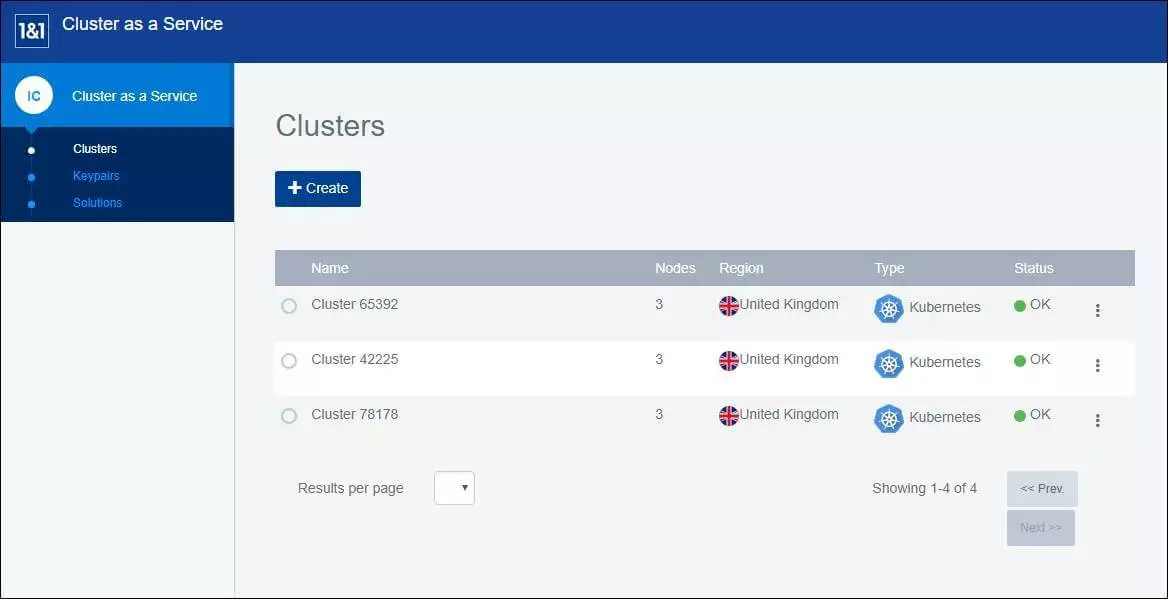

IONOS Container Cluster

Since January 2018, IONOS has been offering the hosting product Cluster-as-a-Service (CLaaS). IONOS Cluster hosting is available to customers directly via the IONOS cloud panel and combines the IONOS IaaS platform with the market-leading container technologies Docker and Kubernetes.

IONOS Cluster hosting only supports containers in Docker format.

CLaaS is aimed at developers and IT operations and enables the provision, management, and scaling of container-based applications in Kubernetes clusters. The functional spectrum includes:

- Managed cloud nodes with dedicated server resources

- Individual management of clusters

- Full access to application containers

- User-defined orchestration

- Professional support when using and creating container clusters in the cloud panel via IONOS first level support.

- If you have any questions about Kubernetes or the installed solutions, the IONOS Cloud Community will help you.

Cluster nodes are managed via the IONOS cloud panel. To orchestrate container applications, CLaaS relies on the orchestrator Kubernetes. Users can manage Kubernetes using the command line tool kubectl or install the Kubernetes dashboard as a user interface. IONOS support covers the provision and management of the container cluster. Direct support for kubectl or the Kubernetes dashboard is not offered.

Via the IONOS cloud panel, users have access to various third-party applications as one-click solutions. The portfolio includes the following software solutions.

| Pre-installed application | Function(s) |
|---|---|
| Fabric8 | Continuous integration |
| Prometheus | Monitoring for Kubernetes |
| HAProxy | Proxy, load balancing |
| Sysdig | Docker monitoring, alerting, error management |
| Linkerd | Service identification, routing, error management |
| Autoscaler | Horizontal scaling |
| EFK (Elastic Fluentd Kibana) | Logging |
| Helm | Package manager for Kubernetes |
| Netsil | Monitoring |
| Calico | Virtual networking & network security |
| Twistlock | Container security suite |
| GitLab | Continuous integration |
| GitLab Enterprise | Continuous integration |
| Istio | Data traffic management & access control |
| Kubeless | Auto-scale, API routing, monitoring, & error management |

The Docker Hub online service can be integrated as a registry for Docker images.

To set up a dedicated container cluster, users select at least 3 virtual machines from the IONOS IaaS platform and use them as master or worker nodes as desired.

The master node is responsible for the control, load distribution, and coordination of the container cluster. Container applications are executed in so-called pods on the worker nodes. If required, containers can also be started directly on the master node.

Cluster nodes for container hosting are available in six price categories with different features: M, L, XL, XXL, 3XL, and 4XL. The range extends from small cluster nodes with one processor core, 2 GB RAM, and 50 GB SSD storage to professional machines with 12 processor cores, 32 GB RAM, and 360 GB SSD storage. A Linux system runs on all nodes.

Users only pay for the resources that they use. The operation of the containers and the use of one-click solutions (with the exception of autoscalers) do not incur any further costs. The selected configuration is billed to the minute.

Autoscalers can only be installed when a new cluster is being created. If autoscaling is enabled, Kubernetes automatically adds a new node to the cluster if the available resources are not sufficient for the planned operation. There are additional costs for using the nodes added by the autoscaler, which are also billed to the minute.
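The per-minute billing described above comes down to simple arithmetic. The sketch below works through it for a three-node cluster that temporarily gains a fourth node through autoscaling. The hourly price of 0.12 per node is purely hypothetical and not an IONOS rate; the function name `cluster_cost` is likewise an assumption.

```python
from decimal import Decimal


def cluster_cost(node_minutes, price_per_node_hour):
    """Cost of a cluster billed to the minute.

    node_minutes: minutes each node was provisioned (one entry per node).
    price_per_node_hour: hypothetical hourly price for the chosen node size.
    """
    price_per_minute = Decimal(price_per_node_hour) / Decimal(60)
    return sum(Decimal(minutes) * price_per_minute for minutes in node_minutes)


# Three regular nodes run for 90 minutes each; the autoscaler adds
# a fourth node that is only needed for 20 minutes.
base_nodes = [90, 90, 90]
autoscaled_nodes = [20]
total = cluster_cost(base_nodes + autoscaled_nodes, "0.12")
print(total)
```

The autoscaled node contributes only its 20 minutes of runtime to the total, which is the practical benefit of per-minute billing over hourly rounding.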

| IONOS CLaaS at a glance | |
|---|---|
| General availability | January 2018 |
| Orchestration | Kubernetes |
| Container management platform | Cluster management via IONOS cloud panel; container management via Kubernetes dashboard or SSH (kubectl) |
| Container format | Docker container |
| Network functions | Networking based on Kubernetes |
| Integration with external storage systems | Kubernetes cloud backup |
| Registry | Docker Hub |
| Integration with other cloud services from the provider (selection) | IONOS CLaaS is fully integrated into the IONOS IaaS platform. |
| Price | Users of the IONOS container cluster only pay for the provision of the cluster nodes. Container clusters comprise a minimum of 3 and a maximum of 99 VMs. |

| Advantages | Disadvantages |
|---|---|
| Full Kubernetes compatibility | No software support for kubectl and Kubernetes |
| Container operation in private, public, and hybrid cloud environments possible | |
| High portability through the use of standard solutions (Docker, Kubernetes) | |
| Wide range of pre-installed third-party solutions | |

Amazon EC2 Container Service (ECS)

Since April 2015, the online retailer Amazon has been offering a solution for container-based virtualization under the name Amazon EC2 Container Service as part of its cloud computing platform AWS (Amazon Web Services).

Amazon ECS provides users with various interfaces for running isolated applications in Docker containers on the Amazon Elastic Compute Cloud (EC2). The CaaS service is technically based on the following cloud resources:

- Amazon-EC2-Instances (Amazon Elastic Compute Cloud Instances): Amazon EC2 is the scalable computing capacity of the Amazon cloud computing service, which is leased in the form of so-called “Instances.”

- Amazon S3 (Amazon Simple Storage Service): Amazon S3 is a cloud-based object storage platform.

- Amazon EBS (Amazon Elastic Block Store): Amazon EBS provides high-availability block storage volumes for EC2 Instances.

- Amazon RDS (Amazon Relational Database Service): Amazon RDS is a database service for managing the relational database engines Amazon Aurora, PostgreSQL, MySQL, MariaDB, Oracle, and Microsoft SQL Server.

ECS only supports containers in Docker format. By default, ECS handles container management using a proprietary orchestrator, which acts as a master and communicates with an agent on each node of the cluster that needs managing. Alternatively, the open source module Blox makes it possible to connect self-developed schedulers, as well as third-party tools such as Mesos, to ECS. One of the strengths of the Amazon EC2 Container Service is its integration with other Amazon services, such as:

- AWS Identity and Access Management (IAM): AWS Identity and Access Management allows you to define user roles and groups, and to manage access to AWS resources by means of authorizations.

- Elastic Load Balancing: Elastic Load Balancing is a cloud-load balancer that automatically distributes incoming application traffic across multiple EC2 instances.

- AWS CloudTrail: AWS CloudTrail is a service that records all user activity and API calls within a cluster. This allows users to understand and analyze their processes as part of resource management and security analysis.

- Amazon CloudWatch: CloudWatch is Amazon’s service for monitoring cloud resources and applications. AWS users can also use it to monitor the operation of application containers.

- AWS CloudFormation: CloudFormation provides AWS users with a service for defining templates that deploy AWS resources. A container service can be described in the form of a reusable JSON template. The template contains the blueprint of the application, including the instances and storage capacities required for execution, as well as their interactions with other AWS services.
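As a rough illustration of what such a reusable JSON template might look like, the sketch below builds a minimal CloudFormation document for an ECS cluster, a task definition, and a service, and prints it as JSON. The helper `ecs_service_template`, the logical resource names, and the example values (`web`, `nginx:latest`, 256 MiB, two tasks) are assumptions for illustration. The template has not been validated against AWS, and a real deployment would also need the EC2 instances, networking, and IAM roles that the cluster runs on.

```python
import json


def ecs_service_template(name, image, memory_mib, desired_count):
    """Build a minimal CloudFormation template describing an ECS service."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "Cluster": {"Type": "AWS::ECS::Cluster"},
            "TaskDefinition": {
                "Type": "AWS::ECS::TaskDefinition",
                "Properties": {
                    # One container per task; more entries make a multi-container task.
                    "ContainerDefinitions": [
                        {"Name": name, "Image": image, "Memory": memory_mib}
                    ]
                },
            },
            "Service": {
                "Type": "AWS::ECS::Service",
                "Properties": {
                    "Cluster": {"Ref": "Cluster"},
                    "TaskDefinition": {"Ref": "TaskDefinition"},
                    "DesiredCount": desired_count,
                },
            },
        },
    }


template = ecs_service_template("web", "nginx:latest", 256, 2)
print(json.dumps(template, indent=2))
```

Because the template is plain data, the same function can be reused with different images or replica counts, which is the point of describing a container service as a template.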

Amazon provides AWS users with private Docker repositories as part of the EC2 Container Registry (ECR) for the central administration of their container images. Access to the registry can be managed at resource level using AWS IAM.

One disadvantage of the Amazon EC2 Container Service is that it is restricted to EC2 instances. Amazon’s CaaS service does not support IT infrastructures outside of AWS, neither physical nor virtual. Hybrid cloud scenarios, in which parts of a multi-container application are operated on premises, are therefore just as impossible as combining the IT resources of different public cloud providers (multi-cloud).

This is probably due to Amazon’s business model for the CaaS service: ECS itself is essentially free of charge for AWS users. Users only pay for the provision of the cloud infrastructure, such as a cluster of EC2 instances, that forms the basis for operating container applications.

The Amazon EC2 Container Service at a glance

| Criterion | Details |
|---|---|
| General availability | April 2015 |
| Orchestration | Proprietary orchestration tool; self-developed schedulers and third-party tools can be integrated using Blox |
| Container platform management | ECS GUI and CLI |
| Container format | Docker container; Windows containers are only supported with beta status (deployment on production systems is not recommended) |
| Network functions | Overlay networking with libnetwork is not supported |
| Integration with external storage systems | Supports Docker Volume (with limited drivers) |
| Registry | Docker Registry and Amazon EC2 Container Registry |
| Integration with other cloud services (selection) | AWS IAM, Elastic Load Balancing, AWS CloudTrail, Amazon CloudWatch, AWS CloudFormation |
| Price | ECS is a free service; users only pay for the resources of the underlying AWS cloud platform |

The following video shows a 5-minute tutorial on Amazon EC2 Container Service.

| Advantages | Disadvantages |
|---|---|
| Complete integration with other AWS products | Container deployment is restricted to Amazon EC2 instances |
| | Proprietary orchestrator: common open source tools like Kubernetes are not supported |

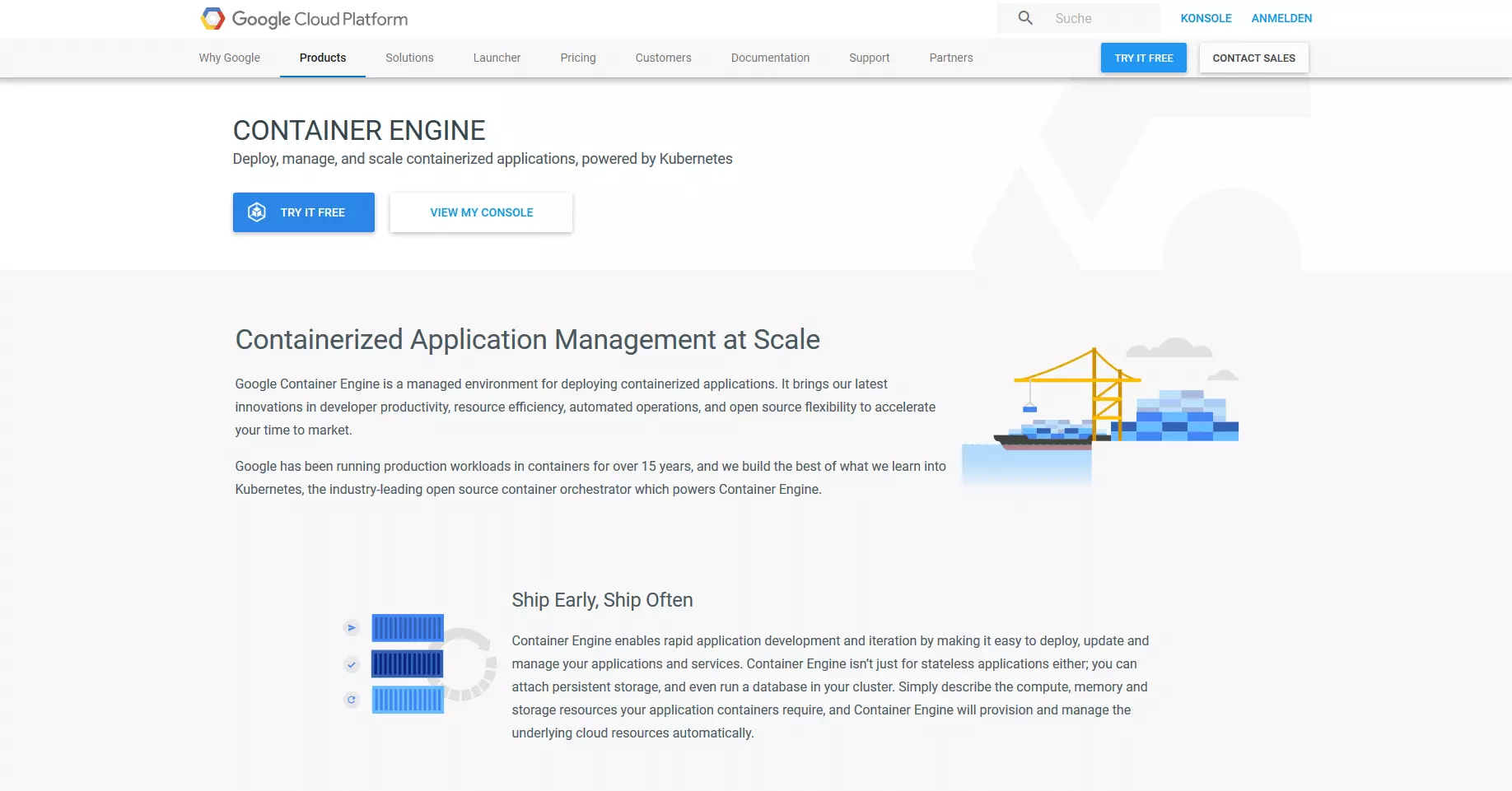

Google Container Engine (GKE)

With Google Container Engine (GKE), Google has also integrated a hosted container service into its public cloud. The core component of the CaaS service is the orchestration tool Kubernetes.

GKE relies on Google Compute Engine (GCE) resources and allows users to run container-based applications on Google Cloud Platform (GCP) clusters. However, users who have GKE are not just limited to the Google Cloud infrastructure: Kubernetes’ cluster federation system makes it possible to combine different computer cluster resources into a logical computing federation and, if necessary, create hybrid, multi-cloud environments.

Every cluster created with GKE consists of a Kubernetes master endpoint, on which the Kubernetes API server runs, and any number of worker nodes. The API server services REST requests, while the worker nodes run the services required to support Docker containers.

While the master node monitors resource usage and the cluster’s status, the containerized applications run on the worker nodes. If a worker node fails, the master distributes the tasks required for the application’s operation to other nodes.

GKE also supports the widely used Docker container format. To provide Docker images, users have a private container registry. A JSON-based syntax offers the option to define container services as templates.

Integrating Kubernetes into GKE provides users with the following functions for orchestrating container applications:

- Automatic binpacking: Kubernetes automatically places containers based on resource requirements and constraints so that the cluster is always optimally loaded. This prevents the availability of container applications from being compromised.

- Health checks with auto-repair: Kubernetes ensures that all nodes and containers work properly by running automatic health checks. Nodes or containers that do not respond are terminated and replaced by new ones.

- Horizontal scaling: With Kubernetes, applications can be scaled up or down as required, either manually using the command line or the graphical user interface, or automatically on the basis of CPU utilization.

- Service discovery and load-balancing: Kubernetes offers two modes for service discovery: services can be detected using environment variables and DNS records. Load balancing between different containers can be done using the IP address and DNS names.

- Storage orchestration: Kubernetes permits automatic storage on various storage systems – whether it is local storage, public cloud storage (via GCP or AWS), or network storage systems such as NFS, iSCSI, Gluster, Ceph or Flocker.
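Automatic horizontal scaling on the basis of CPU utilization can be sketched in a few lines. The Kubernetes documentation describes the basic rule of the Horizontal Pod Autoscaler as scaling the replica count in proportion to the ratio of measured to target utilization, rounded up. The sketch below implements only that proportional rule; the function name and the default replica limits are assumptions, and the tolerance and stabilization behavior of the real autoscaler is deliberately left out.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: ceil(current * measured / target), then clamp."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


# Four replicas running at 90% CPU with a 60% target: scale up.
scale_up = desired_replicas(4, 90, 60)
# Four replicas idling at 30% CPU with a 60% target: scale down.
scale_down = desired_replicas(4, 30, 60)
# A large spike is capped by the configured maximum.
capped = desired_replicas(3, 200, 50)
print(scale_up, scale_down, capped)
```

Clamping to a minimum and maximum keeps a short load spike or a faulty metric from scaling the application to zero or to an unbounded size.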

Similar to ECS in AWS, GKE is directly integrated into the Google Cloud platform so that container users have access to various features of the public cloud, in addition to the orchestration function range:

- Identity and Access Management: GKE’s IAM is implemented using Google Accounts and supports role-based permissions.

- Stackdriver Monitoring: Google’s monitoring tool informs users about the performance, operating time, and state of cloud operations. To do this, Stackdriver collects monitoring metrics, events, and metadata, and prepares them in the form of a clear dashboard. As a data source, the tool supports the Google Cloud Platform, Amazon Web Services, and various applications, such as Cassandra, Nginx, Apache HTTP Server, and Elasticsearch.

- Stackdriver Logging: Google’s logging tool allows users to store, monitor, and analyze log data and events. Stackdriver Logging supports the Google Cloud Platform and Amazon Web Services.

- Container Builder: Through Container Builder, Google provides a tool for creating Docker images directly in the cloud. The application source code must first be loaded into Google Cloud Storage.

Google and Amazon take different approaches to pricing their CaaS services. With GKE, container management for clusters with up to five Compute Engine instances (nodes) is available free of charge; users only pay for the cloud resources provided (CPU, memory, etc.). If users want to run containers on larger clusters (six or more instances), Google also charges a usage fee for the container engine, billed per cluster per hour.
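The pricing rule for the container engine can be expressed as a small function. The threshold of five free nodes comes from the description above; the hourly fee of 15 cents is a hypothetical placeholder rather than a Google price, and the function name is an assumption. Amounts are kept in whole cents to avoid floating-point rounding.

```python
FREE_NODE_LIMIT = 5  # clusters up to this size incur no management fee


def management_fee_cents(node_count, hours, hourly_cluster_fee_cents):
    """Container engine fee for one cluster, excluding the underlying cloud resources."""
    if node_count <= FREE_NODE_LIMIT:
        return 0
    # Flat rate per cluster per hour, independent of how many nodes exceed the limit.
    return hours * hourly_cluster_fee_cents


small_cluster = management_fee_cents(node_count=5, hours=24, hourly_cluster_fee_cents=15)
large_cluster = management_fee_cents(node_count=6, hours=24, hourly_cluster_fee_cents=15)
print(small_cluster, large_cluster)
```

Note that the fee is charged per cluster, so in this model a cluster with six nodes and one with sixty nodes pay the same management fee; only the cost of the nodes themselves differs.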

Google Container Engine at a glance

| Criterion | Details |
|---|---|
| General availability | August 2015 |
| Orchestration | Kubernetes |
| Container platform management | Cluster management via Google Cloud; container management via Kubernetes UI |
| Container format | Docker container |
| Network functions | Networking based on Kubernetes |
| Integration with external storage systems | Kubernetes Persistent Volume |
| Registry | Docker Registry and Google Container Registry |
| Integration with other cloud services from the provider (selection) | Cloud IAM, Stackdriver Monitoring, Stackdriver Logging, Container Builder |
| Price | Standard clusters with up to five nodes: free of charge; standard clusters with six or more nodes: flat-rate billing per cluster per hour |

In the following video, Google Product Manager Dan Paik gives an overview of container deployment with GKE and Kubernetes:

| Advantages | Disadvantages |
|---|---|
| Complete integration with other Google products | For clusters with six or more nodes, Google charges a usage fee for the container engine in addition to the costs for computing resources |
| Container deployment in hybrid and multi-cloud environments possible | |

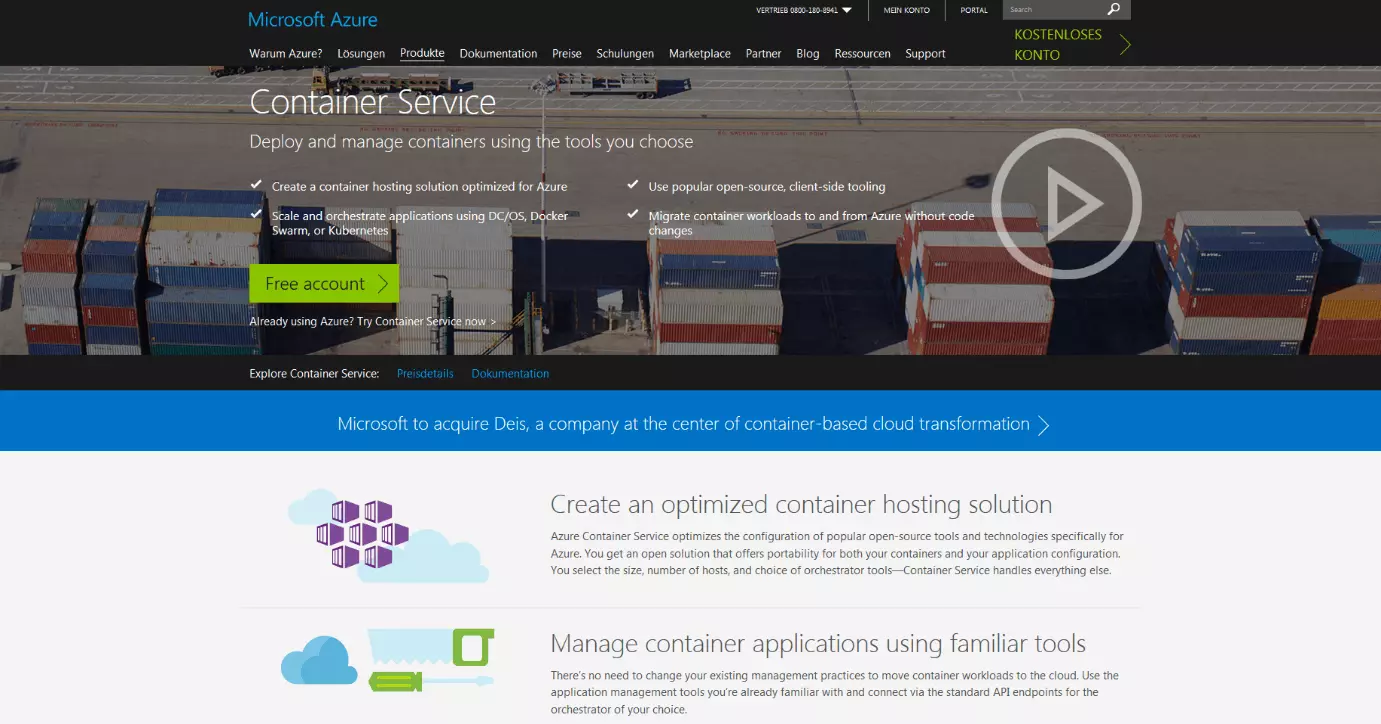

Microsoft Azure Container Service (ACS)

Azure Container Service (ACS) is a hosting environment optimized for Microsoft’s cloud computing platform Azure, which allows users to develop container-based applications and deploy them in scalable computer clusters. ACS relies on Azure-optimized versions of open source container tools and enables the operation of Linux containers in Docker format. Windows containers are currently only supported in a preview version.

The core component of Microsoft’s CaaS service is the Azure Container Engine, whose source code is available under an open source license on GitHub. The Azure Container Engine acts as a template generator that creates templates for the Azure Resource Manager (ARM). These can be managed using an API with one of the following orchestration tools: Docker Swarm, DC/OS, and Kubernetes (since February 2017). The choice of orchestrator primarily determines which features are available to ACS users when operating container applications in the Azure cloud. Mesosphere’s DC/OS cluster manager is included in the Azure Container Service in combination with the orchestration platform Marathon. This setup provides users with the following range of functions:

- Web-based user interface: Container clusters are administered using Marathon’s web-based orchestration user interface.

- High availability: Marathon is run as an active/passive cluster. For each active node, a fully redundant passive node is provided, which can take over the tasks of the active node should it fail.

- Service discovery and load balancing: DC/OS uses Marathon LB to provide an HAProxy-based load balancer and Mesos DNS as a DNS-based service discovery tool.

- Health checks: The status of an application can be queried through Marathon via HTTP or TCP. Monitoring functions are available through a REST API, the command line, or the web-based user interface.

- Metrics: Marathon provides detailed JSON-based metrics through an API, which can be accessed using monitoring tools such as Graphite (https://graphiteapp.org/), StatsD, or Datadog.

- Notification service: Those using DC/OS with Marathon in the Azure cloud have the option to register an HTTP endpoint for event-related notifications.

- Application groups: On request, containers can be grouped into so-called “pods,” which can be managed as self-contained units.

- Rule-based deployment: Restrictions allow you to define precisely where and how applications are distributed into the cluster.
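The TCP variant of the health checks mentioned in this list can be illustrated with a short sketch: an application counts as healthy if a TCP connection to its port can be opened within a timeout. This is not Marathon’s implementation, only the general idea; the function name `tcp_health_check` and the throwaway local listener used for the demonstration are assumptions.

```python
import socket


def tcp_health_check(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections and timeouts
        return False


# Demonstration against a throwaway listener on a free local port.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen(1)
port = listener.getsockname()[1]

healthy_while_up = tcp_health_check("127.0.0.1", port)
listener.close()  # simulate the application going down
healthy_after_stop = tcp_health_check("127.0.0.1", port)
print(healthy_while_up, healthy_after_stop)
```

An orchestrator would run such a check periodically and restart or replace an instance after a configurable number of consecutive failures.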

In the Docker Swarm variant, ACS relies on the Docker stack, using the same open source technologies as Docker’s Universal Control Plane (a basic component of Docker Datacenter). Implemented in the Azure Container Service, Docker Swarm provides the following functionality for scaling and orchestrating container applications:

- Docker Compose: Docker’s solution for multi-container applications allows multiple containers to be linked together and centrally managed with a single command. Any number of containers, including all dependencies, are defined in a control file based on the markup language YAML.

- Control via the command line: The Docker CLI (command line interface) and the multi-container tool Docker Compose enable the direct administration of container clusters via the command line.

- REST API: The Docker Remote API provides access to various third-party Docker ecosystem tools.

- Rule-based Deployment: The distribution of Docker containers in the cluster can be managed using labels and restrictions.

- Service Discovery: Docker Swarm offers users a diverse range of service discovery functions.

Since February 2017, ACS users have also been able to use the orchestrator Kubernetes to automate the deployment, scaling, and administration of container applications in Azure clusters. As implemented in ACS, Kubernetes provides all the basic functions listed in the Google Container Engine section. ACS is also integrated directly into the Azure cloud platform:

- Azure Portal and Azure CLI 2.0: Users configure container clusters using the Azure portal – the central user interface for the cloud platform – or the command line interface Azure CLI 2.0.

- Azure Container Registry: The Azure Container Registry also provides Microsoft users with a private repository to deploy Docker images.

- Operations Management Suite (OMS): Monitoring and logging options for container services are provided by Microsoft Operations Management Suite (OMS).

- Azure Stack: Azure Stack can be used to help create a container operation in hybrid cloud environments.

Additionally, Microsoft has extended ACS to include CI/CD (continuous integration and deployment) capabilities for multi-container applications developed with Visual Studio Team Services or the open source tool Visual Studio Code. Identity and access management is handled by Active Directory, whose basic functions are available to users free of charge up to a limit of 500,000 directory objects.

Similar to Amazon ECS, the Azure Container Service does not charge for using the container tools. Fees are only charged for using the infrastructure.

An overview of the Microsoft Azure Container Service

| Criterion | Details |
|---|---|
| General availability | April 2016 |
| Orchestration | Open source tools available to choose from: Mesosphere DC/OS, Docker Swarm, Kubernetes (from February 2017) |
| Container platform management | Cluster management via Azure Portal or Azure CLI 2.0; container management via the native interface of the selected orchestrator: Marathon UI, Docker CLI, or Kubernetes UI |
| Container format | Docker container; Windows containers are supported in a preview version |
| Network functions | Overlay networking via libnetwork and Container Network Model (CNM) |
| Integration with external storage systems | Docker volume driver via Azure File Storage |
| Registry | Docker Registry and Azure Container Registry |
| Integration with other cloud services | Azure Portal, Azure Resource Manager (ARM), Azure Active Directory, Azure Stack, Microsoft Operations Management Suite (OMS) |
| Price | ACS is free to use; users only pay for Azure’s underlying resources |

| Advantages | Disadvantages |
|---|---|
| ACS is fully integrated with the Azure platform | Has not been on the market as long as competitors |
| ACS supports all standard orchestration tools: Docker Swarm, Kubernetes and DC/OS (Marathon) | |
| Container operation in hybrid cloud environments is possible via Azure Stack | |

Container-as-a-Service: A comparison of suppliers

The following table compares the aforementioned CaaS providers against the selection criteria that users typically apply:

| Selection criteria | Amazon EC2 Container Service (ECS) | Google Container Engine (GKE) | Microsoft Azure Container Service (ACS) | IONOS Container Cluster |
|---|---|---|---|---|
| General availability (GA) | April 2015 | August 2015 | April 2016 | January 2018 |
| Technical basis (compute capacity) | EC2 instances | Google Compute Engine (GCE) nodes and clusters | Virtual machines and virtual machine scale sets | Virtual machines of the IONOS IaaS platform |
| Object storage | Amazon S3 (Simple Storage Service) | Google Cloud Storage | Blob Storage | None |
| Standard tool for orchestration | Proprietary administration tool | Kubernetes | Open source tools: Mesosphere DC/OS, Docker Swarm, Kubernetes | Kubernetes |
| Container platform management | ECS GUI and CLI | Cluster management via Google Cloud; container management via Kubernetes dashboard | Cluster management via Azure; container management via Docker tools | Via IONOS cloud panel |
| Container format for production systems | Docker container | Docker container | Docker container | Docker container |
| Network functions | Overlay networking via libnetwork is not supported | Networking with Kubernetes | Overlay networking via libnetwork and Container Network Model (CNM) | Networking based on Kubernetes |
| Integration with external storage systems | Docker volume support (with limited drivers) | Kubernetes Persistent Volume | Docker volume driver via Azure File Storage | Kubernetes cloud backup |
| Registry | Docker Registry, Amazon EC2 Container Registry | Docker Registry, Google Container Registry | Docker Registry, Azure Container Registry | Docker Registry |
| Identity and access management | Yes | Yes | Yes | No |
| Hybrid cloud support | No | Yes | Yes | Yes |
| Logging and monitoring | Yes | Yes | Yes | Yes |
| Automatic scaling | Yes | Yes | Yes | Yes |
| Integration with other cloud services | Elastic Load Balancing, Elastic Block Store, Virtual Private Cloud, AWS IAM, AWS CloudTrail, AWS CloudFormation | Cloud IAM, Stackdriver Monitoring, Stackdriver Logging, Container Builder | Azure Portal, Azure Resource Manager (ARM), Azure Active Directory, Azure Stack, Microsoft Operations Management Suite (OMS) | Integration in the IONOS IaaS platform; central management via the IONOS cloud panel |
| Price | ECS is a free service; users only pay for the resources of the AWS cloud platform | Standard clusters with up to five nodes: free of charge; standard clusters with six or more nodes: standard billing per cluster per hour | ACS is free to use; users only pay for the resources of the Azure cloud platform | Operating containers is free of charge; IONOS Container Cluster users only pay for the resources used on the underlying IONOS cloud platform |
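
If you want to narrow the comparison down to your own requirements, the table can be treated as a small data set and filtered. The following Python sketch is only an illustration: the class `Provider`, the function `shortlist`, and the shortened provider names are hypothetical, and only three of the criteria (orchestration tool, identity and access management, hybrid cloud support) are transcribed from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    """One column of the comparison table, reduced to three criteria."""
    name: str
    orchestrators: frozenset
    iam: bool
    hybrid_cloud: bool


# Values transcribed from the comparison table; names are shortened.
PROVIDERS = [
    Provider("Amazon ECS", frozenset({"proprietary"}), iam=True, hybrid_cloud=False),
    Provider("Google GKE", frozenset({"kubernetes"}), iam=True, hybrid_cloud=True),
    Provider(
        "Microsoft ACS",
        frozenset({"dc/os", "docker swarm", "kubernetes"}),
        iam=True,
        hybrid_cloud=True,
    ),
    Provider("IONOS Container Cluster", frozenset({"kubernetes"}), iam=False, hybrid_cloud=True),
]


def shortlist(providers, orchestrator=None, need_iam=False, need_hybrid=False):
    """Return the names of all providers that meet every requested criterion."""
    result = []
    for p in providers:
        if orchestrator is not None and orchestrator.lower() not in p.orchestrators:
            continue
        if need_iam and not p.iam:
            continue
        if need_hybrid and not p.hybrid_cloud:
            continue
        result.append(p.name)
    return result


if __name__ == "__main__":
    # Example: Kubernetes is required, as are IAM and hybrid cloud support.
    print(shortlist(PROVIDERS, orchestrator="Kubernetes", need_iam=True, need_hybrid=True))
```

With these three requirements, only GKE and ACS remain on the list. In practice, further rows of the table, such as price or storage integration, would be added as extra fields in the same way.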

CaaS vs. on-premises options

As an alternative to CaaS, you can run your container tools on-premises, i.e. in your own data center. Both options have advantages and disadvantages.

Those who are against cloud computing services usually cite security concerns. Indeed, companies with a container environment in their own data center have the greatest possible control over their information. This includes maximum flexibility when selecting hardware and software components (underlying servers, runtime environment, operating systems, and management tools), as well as the certainty that all data is processed and stored exclusively within the company.

However, this freedom of choice requires far more staff and incurs greater costs for providing and maintaining the container environment. Installations in a company's own data center often require a high upfront investment, and whether it will pay off in the long run is uncertain. A cloud-based container environment, on the other hand, makes it possible to provide new applications and software functions quickly and cost-effectively. Within the framework of CaaS, users only pay for the services that they actually use. Advance payments are unnecessary. This is particularly attractive for smaller companies, startups, and the self-employed, who need to realize their projects on manageable budgets.
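
The trade-off between an upfront investment and usage-based billing can be estimated with a simple break-even calculation. The Python sketch below is only a rough model: the function name and all figures are hypothetical, monthly costs are assumed to be constant, and factors such as hardware renewal or fluctuating load are ignored.

```python
import math


def months_until_break_even(upfront_cost, on_prem_monthly, cloud_monthly):
    """Return the first whole month in which the cumulative on-premises cost
    is no higher than the cumulative cloud cost, or None if the on-premises
    setup never catches up.

    Cumulative on-premises cost after m months: upfront_cost + m * on_prem_monthly
    Cumulative cloud cost after m months:       m * cloud_monthly
    """
    monthly_saving = cloud_monthly - on_prem_monthly
    if monthly_saving <= 0:
        # Running costs on-premises are at least as high as in the cloud,
        # so the upfront investment is never recovered.
        return None
    return math.ceil(upfront_cost / monthly_saving)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    print(months_until_break_even(upfront_cost=60_000, on_prem_monthly=1_500, cloud_monthly=4_000))
    print(months_until_break_even(upfront_cost=60_000, on_prem_monthly=1_500, cloud_monthly=1_200))
```

In the first hypothetical case the investment is recovered after 24 months. In the second, the cloud is cheaper every month, so the on-premises installation never pays for itself.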

Like other cloud services, CaaS lets you outsource your IT infrastructure to specialized service providers, who ensure that the technical foundations are always up to date and work reliably. Combined with usage-based billing, this makes CaaS well suited to companies with a fast pace of development and innovation. Companies opting for an on-premises solution forgo the flexibility and scalability that cloud providers offer their customers.

In addition, data in a company's own data center is not necessarily safer than data in the cloud. For small and medium-sized enterprises, it is usually impossible to provide an IT infrastructure that can compete with established public cloud providers’ data centers in terms of availability, data security, and compliance.

Advantages and disadvantages of the CaaS model

| Advantages | Disadvantages |
|---|---|
| Requires little effort for installation, configuration, and operation | Depending on the provider, there are restrictions on available technologies |
| Maintenance of the technical basis is undertaken by the hosting provider | Outsourcing business data to the cloud is risky |
| Usage-based billing | |
| Scope of services is scalable | |
| Short-term load peaks are absorbed | |

Advantages and disadvantages of self-hosted container platforms

| Advantages | Disadvantages |
|---|---|
| Complete control over technical design | High investment costs |
| All data remains in the company | High installation, configuration, and maintenance costs |
| | Requires a basic understanding of underlying container technologies, hardware, and administration |